Dear Editor*:

I was happy to see a meta-analysis by Silvia Lopes et al. (DOI: 10.1177/2050312119831116) comparing the effects of elastic resistance and ‘conventional’ resistance on muscle strength. In general, I believe the analysis was adequate; however, I noted several serious errors in the manuscript that must be addressed.

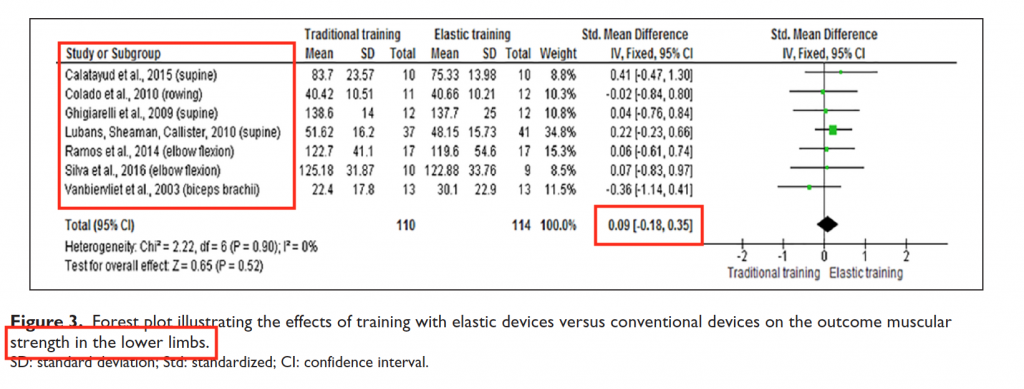

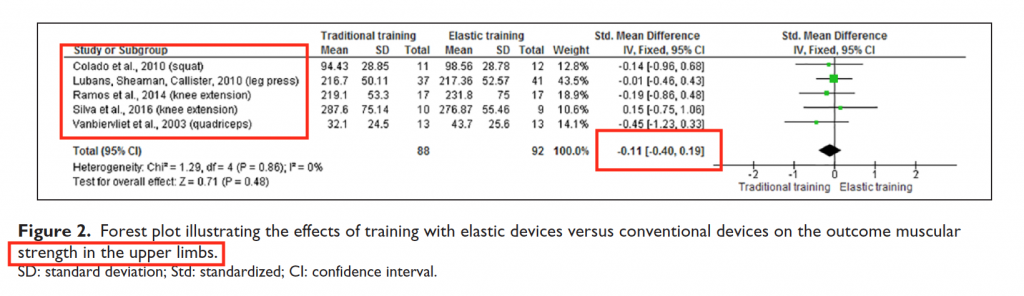

It appears that the legends of Figures 2 and 3 were switched. The forest plot in Figure 2 contains data for lower limb outcomes, while its legend is labeled “upper limbs.” More importantly, the authors have reversed their results in the Results section and abstract, reporting the upper limb SMD as the lower limb SMD, and vice versa. Based on the forest plots, the correct results appear to be:

Upper Limb SMD = 0.09 (-0.18, 0.35) p = 0.5

Lower Limb SMD = -0.11 (-0.40, 0.19) p = 0.48.
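As a rough consistency check on these corrected values, a two-sided p-value can be back-calculated from a point estimate and its confidence interval using a normal approximation, where SE ≈ (upper − lower) / (2 × 1.96). The sketch below assumes the forest-plot intervals are 95% Wald-type intervals; the function name `p_from_ci` is purely illustrative.

```python
import math

def p_from_ci(estimate, lower, upper, z_crit=1.96):
    """Approximate two-sided p-value from an estimate and its 95% CI.

    Assumes a normal (Wald-type) interval, so SE = (upper - lower) / (2 * z_crit).
    """
    se = (upper - lower) / (2 * z_crit)
    z = abs(estimate) / se
    # Two-sided p = 2 * (1 - Phi(|z|)), which equals erfc(|z| / sqrt(2))
    return math.erfc(z / math.sqrt(2))

# Corrected values as read from the forest plots
for label, est, lo, hi in [
    ("Upper limb", 0.09, -0.18, 0.35),
    ("Lower limb", -0.11, -0.40, 0.19),
]:
    print(f"{label}: SMD = {est:+.2f}, approx. p = {p_from_ci(est, lo, hi):.2f}")
```

Both back-calculated p-values land within a few hundredths of the values listed above, which is about as close as one can expect given that the interval bounds are rounded to two decimals.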

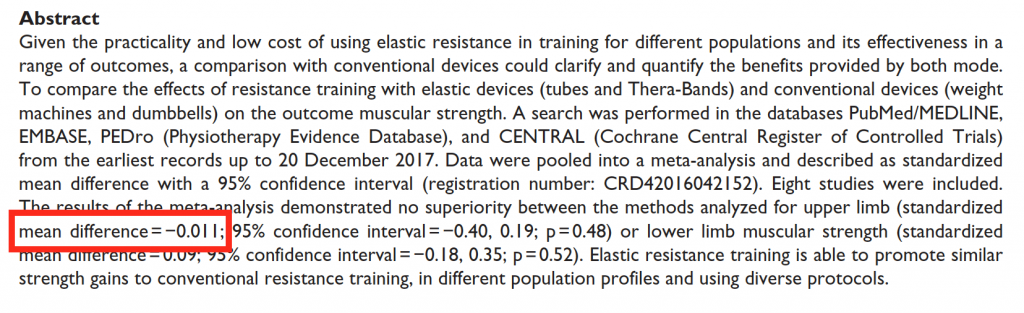

Unfortunately, the authors also mistakenly reported the SMD as 0.011 in the abstract, rather than -0.11.

The authors used a fixed-effect model for the meta-analysis, citing the homogeneity of the included studies “through the I² value”; however, no I² value was reported. Furthermore, the heterogeneity of the study samples (both healthy and diseased cohorts were included), study durations, and muscle groups examined would likely warrant a random-effects model to strengthen the validity of the analysis. In fact, the authors go on to note, “Regarding health, the sample varied from physically active individuals and athletes to individuals with coronary heart disease and moderate COPD.”
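To illustrate why an unreported I² matters for the choice of model, here is a minimal sketch that pools effect sizes with inverse-variance weights (fixed effect), computes Cochran's Q and I², and then re-pools using the DerSimonian-Laird random-effects estimator. The two studies below are hypothetical and are not the data from the Lopes et al. meta-analysis; DerSimonian-Laird is one common random-effects estimator, not necessarily the one the authors would have used.

```python
import math

def pool(effects, variances):
    """Fixed-effect (inverse-variance) and DerSimonian-Laird random-effects pooling."""
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    se_fixed = math.sqrt(1.0 / sum_w)

    # Cochran's Q and I^2 quantify between-study heterogeneity
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    # DerSimonian-Laird estimate of the between-study variance tau^2
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each within-study variance
    w_re = [1.0 / (v + tau2) for v in variances]
    sum_w_re = sum(w_re)
    random = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum_w_re
    se_random = math.sqrt(1.0 / sum_w_re)

    return {"fixed": fixed, "se_fixed": se_fixed, "q": q, "i2": i2,
            "tau2": tau2, "random": random, "se_random": se_random}

# Hypothetical example: two clearly discordant studies (SMDs and their variances)
result = pool(effects=[0.0, 2.0], variances=[0.25, 0.25])
print(f"I^2 = {result['i2']:.1%}, tau^2 = {result['tau2']:.2f}")
print(f"fixed SE = {result['se_fixed']:.2f}, random SE = {result['se_random']:.2f}")
```

With these hypothetical inputs, I² is 87.5% and the random-effects standard error (1.00) is far wider than the fixed-effect one (about 0.35). When I² is not reported, readers cannot tell whether the narrower fixed-effect interval was justified.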

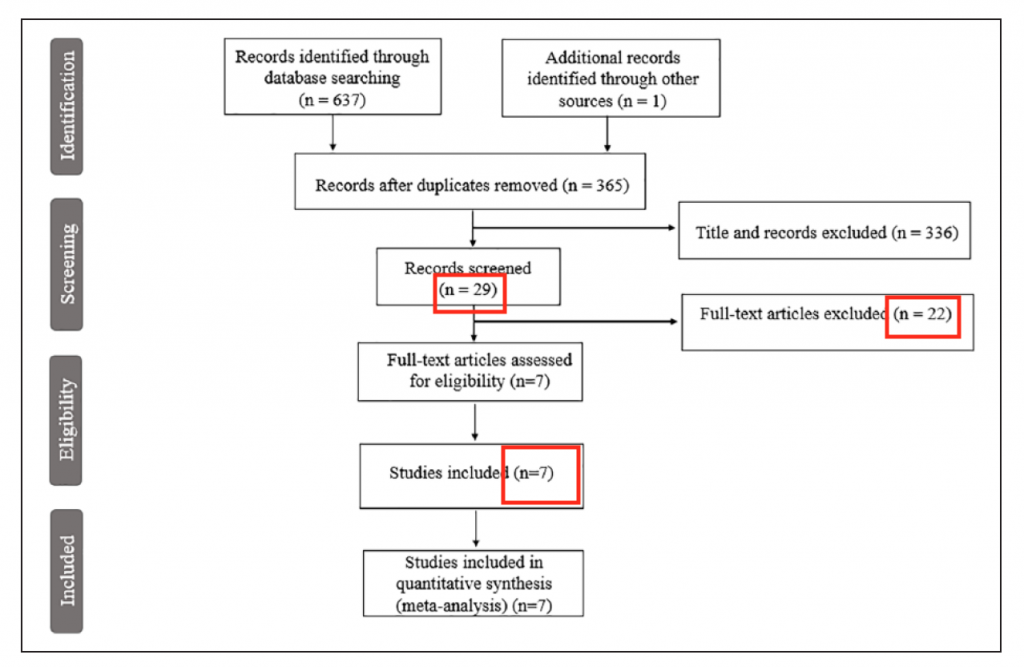

In the Results section, as well as in the abstract, the authors stated that eight studies were included. However, when describing the characteristics of the studies, the authors reference only 7 studies from their bibliography (1, 2, 3, 4, 6, 7, 8), apparently having dropped reference #5 (Webber et al. 2010), which was not included in any analysis of the results in the forest plots of Figures 2 or 3.

The PRISMA chart in Figure 1 reveals further inconsistencies with the text. In addition to showing 7 studies (rather than the stated “eight”) included in the final analysis, Figure 1 indicates that 29 studies were screened and 22 excluded; however, the text indicates that the authors screened 23 and excluded 15.

Under “Methodological Quality of Included Studies,” the authors give the number of studies with certain characteristics; however, the superscripted citations do not match the counts in the text. For example, the authors state that four studies scored “7,” but they cite only 2 references.

In the Discussion section, the authors note that references 16-18 compared elastic resistance to a control group; however, only 1 of these review studies (reference 16) focused on elastic resistance; the other 2 reviewed all types of resistance training, including elastic resistance.

As a journal editor, I was very disappointed to see these errors published in this pay-for-publish open access journal. These obvious errors should have been noted in peer review, which leads me to question if adequate peer review even occurred.

I assume the authors paid the $1,125 fee to have their article published. In today’s world of predatory journals, these types of errors suggest a poor editorial and peer review process, which allowed numerous critical errors that affect the article’s validity. It’s unfair for readers to be presented with such an erroneous publication. Busy clinicians are often limited in time, and they must trust that a publication is free of errors.

Meta-analytical designs are generally rated as the “highest” level of evidence: “level 1” evidence. However, the level of evidence should always be limited by the quality of the paper. Unfortunately, the errors in this paper go beyond quality assessment.

A quality assessment tool may not even have identified these errors. Errors like these are generally detected only upon focused review of the paper, which should have been done during the editorial and peer review process. Your readers should not have to bring these errors to your attention. This paper is an example of the importance of reading the full publication rather than relying on the abstract alone (which in this case was also wrong).

I applaud the authors for completing this research, and I doubt these errors change the outcome of the analysis, as we have reported similar findings of no difference in EMG activity between elastic and isotonic resistance.¹ However, the authors and the journal editor have a responsibility to correct these errors immediately (including the PubMed abstract) and to ensure their conclusions remain valid. Furthermore, I suggest that the journal editor review the editorial process to ensure these mistakes do not occur again.

Phil Page, PhD, PT, ATC, LAT, CSCS, FACSM

1. Aboodarda SJ, Page PA, Behm DG. Muscle activation comparisons between elastic and isoinertial resistance: a meta-analysis. Clinical Biomechanics. 2016;39:52-61.

*This is a letter to the editor of SAGE Open Medicine, an open-access, pay-for-publish journal. Ironically, my letter has to go through peer review before being considered for publication. And as of now, there is no fee for publishing letters to the editor.

UPDATE September 4, 2019: After I inquired about the status of my ‘peer review’, the journal informed me that they have not been able to find a suitable reviewer for my letter to the editor. Not sure why the editor can’t just review it. Isn’t that their job? And I guess the longer this article stays out there with all its errors, the more people will believe the results?