Today’s approach to “evidence-based practice” (EBP) should be a process rather than an end-point. In this article published in the Journal of Performance Health, I recommend a pragmatic 6-step process for EBP, beginning with asking the question and ending with assessing the results: AAAAAA.

The AAAAAA’s of Pragmatic EBP

Want some help to better utilize research in your practice? Need to get ‘up-to-date’ on the latest research? Are you frustrated, confused, or simply scared of the process? If you have a clinical question and need some direction in finding the answer in the scientific literature, just ask the Fonz, and he’ll say “AAAAAA!”: Ask, Acquire, Analyze, Answer, Apply, and Assess.

ASK

What is your question? Be specific about what you want to know. Broad questions will lead to information overload, but a question that is too specific may narrow your results too much. Striking the right amount of detail is important, and it might take some tweaking along the way, adding or removing details from your question. Think about keywords related to your question (that’s how most information is categorized now), and list keywords for each of these categories:

- population

- body part

- disease or injury

- intervention

- outcome

For example, if your question is “Will kinesiology tape help reduce swelling in patients with lymphedema after breast surgery?”, you might use these keywords:

- population = females

- body part = upper extremity

- disease or injury = breast cancer, lymphedema

- intervention = kinesiology taping

- outcome = swelling

Remember, you might need to add some details along the way such as an age range for your population, or other outcomes such as range of motion.

ACQUIRE

Now that you have your question and keywords, it’s time to search the literature. The Internet offers many easily accessible resources today; however, you need to know where to look and what to trust. Because one source rarely provides all the information you’re looking for, don’t rely on a single source; on the other hand, you’ll probably want to limit your search to 2 or 3 reputable sources.

Finding the articles

Remember that different sources offer different ‘levels’ of information, ranging from original research articles you’ll need to interpret (like raw data) to more “consumerized” articles that may or may not provide sufficient or even credible information. You want a good scientific basis for your answer, but you also want to gather and interpret the information efficiently. Here are some good free sources to use instead of simply entering your keywords into the Google search bar. Note: research databases such as CINAHL and SPORTDiscus include journals that might not be indexed in PubMed; however, these databases often require access through a university library.

- PubMed: The “Granddaddy” of online scientific searching, and one of the most reputable databases of research papers. You can combine keywords with the Boolean operators “AND”, “OR”, and “NOT” to focus your results (AND and NOT narrow a search; OR broadens it). You can also filter your results, limiting them to clinical trials or reviews, for example. Sometimes meta-analyses and systematic reviews have already been done to answer your question, so look for those first by filtering for “Reviews” in PubMed. If you create a free account, you can save your searches and create alerts for new articles using keywords. For most articles you will only have access to the abstract; however, some full articles are provided for free. And note that PubMed does not index all relevant journals! (www.pubmed.com)

- PEDro: The free Physiotherapy Evidence Database contains over 37,000 randomized trials, systematic reviews, and clinical practice guidelines from around the world. (www.pedro.org.au)

- Google Scholar: Focus your Google search on research articles by using Google Scholar’s free search. (scholar.google.com)

- Industry websites: Industry-sponsored websites often contain research specific to their products. While there is a potential for publication bias on these sites (but not on TheraBandAcademy.com!), you may quickly find research to help answer your question. Other sources may include professional association websites like www.apta.org or www.acsm.org, which may publish position statements or clinical summaries.
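
Because PubMed accepts Boolean operators, the keyword categories from the ASK step can be turned into a search string mechanically: alternative terms within one category are joined with OR, and the categories themselves are joined with AND. The sketch below is a minimal illustration under stated assumptions: the keyword lists are hypothetical choices for the kinesiology-taping example, and the URL targets NCBI’s public E-utilities `esearch` endpoint. Pasting the printed query into the PubMed search box should behave the same way.

```python
from urllib.parse import urlencode

# NCBI E-utilities search endpoint (returns matching PubMed IDs).
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_query(concepts: dict[str, list[str]]) -> str:
    """OR together synonyms within a category, then AND the categories."""
    groups = []
    for terms in concepts.values():
        if not terms:
            continue  # skip categories you chose not to use
        # Quote multi-word terms so they are searched as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Hypothetical keyword choices for the lymphedema / kinesiology tape question.
concepts = {
    "disease": ["lymphedema"],
    "population": ["breast cancer"],
    "intervention": ["kinesiology tape", "kinesiology taping"],
    "outcome": ["swelling"],
    "body part": [],  # left empty to avoid over-narrowing the search
}

query = build_query(concepts)
url = ESEARCH_URL + "?" + urlencode({"db": "pubmed", "term": query, "retmax": 20})
print(query)
print(url)
```

Adding or emptying a category is the code equivalent of the “tweaking” described in the ASK step.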

Narrowing the results

When you start to comb through the search results, start by looking at the title. If the title is relevant to your question, then read the abstract. If the abstract is relevant to your question, regardless of the conclusion, save the abstract/article. You can print them, copy and paste, or save within your PubMed account, for example. Next, you’ll want to get access to the full articles.

Unfortunately, many full articles are difficult to obtain without university library privileges. Aside from purchasing the full article directly from the publisher website at a hefty price (usually $20-40), there are a few things you can try.

- Professional organizations like the APTA sometimes provide free access to their online journal databases for members (www.ptnow.org)

- Type the title of the article you’re looking for in Google to see if the full article is accessible

Staying ahead of the curve

At this point, you might want to set up email “alerts” on your topic if you want to know when the very latest research comes out. There are several ways to do this:

- Set up Pubmed alerts using keywords

- Sign up for relevant journal table of contents alerts for new issues and articles at their home pages

- Use a service like www.AMEDEO.com to send you new article titles from journals

- Use an app like QxMD and select the categories and alerts you want

Once you have access to the full article, you can start to review it. You can often find more articles by ‘cross-referencing’ the cited articles in a study as well. Ideally, you should take time to critically review the full article; never rely on the title or abstract conclusion alone. Without analyzing the full article, you will not know if the study is applicable to your question.

ANALYZE

Once the title or abstract piques your interest, it’s time to further analyze. Let’s start with the anatomy of an abstract or article. (Note: While all research articles should have abstracts, not all abstracts have a research article!). Articles and abstracts generally follow an outline that may or may not include these 5 sections:

- Background & Purpose

- Methods

- Results

- Discussion

- Conclusion

Now let’s examine the contents of the different sections.

Background & Purpose: Authors should provide appropriate background information that supports the rationale for the study, leading to the research question and/or hypothesis. The purpose of the study should be clearly stated. Many studies frame the research question as a “null hypothesis” (for example, that there is no difference between groups), which statistical testing then either rejects or fails to reject.

Methods: The study design should be identified here and should address the research question/purpose (more on that later). The key items to look for in the methods section of clinical trials are known as “PICO”: Population, Intervention, Comparison group, and Outcome. Note that other study designs may not include these specifically. The statistical analysis procedures should be identified at the end of this section. Studies should follow the reporting guidelines collected at www.equator-network.org (such as CONSORT for randomized trials).

Results: This section provides the specific results of the statistical analysis, quantifying the outcome measures. This might include averages and statistical significance. Look for clinically-relevant outcomes such as effect sizes, confidence intervals, and minimal clinically important differences.

Discussion: The authors should address the results and provide context relative to the purpose. Limitations and threats to internal and external validity (bias) should be addressed here as well. Clinical implications and need for further research should also be mentioned.

Conclusion: The conclusion should answer the research question and be consistent with the findings within the context of the study limitations.

Now that you have a feeling for the anatomy, let’s get down to the analysis. In order, these are the things you should consider when analyzing an article.

- The research design & level of evidence

- Quality of the research, including bias

- Impact of the evidence (clinical statistics)

- The source

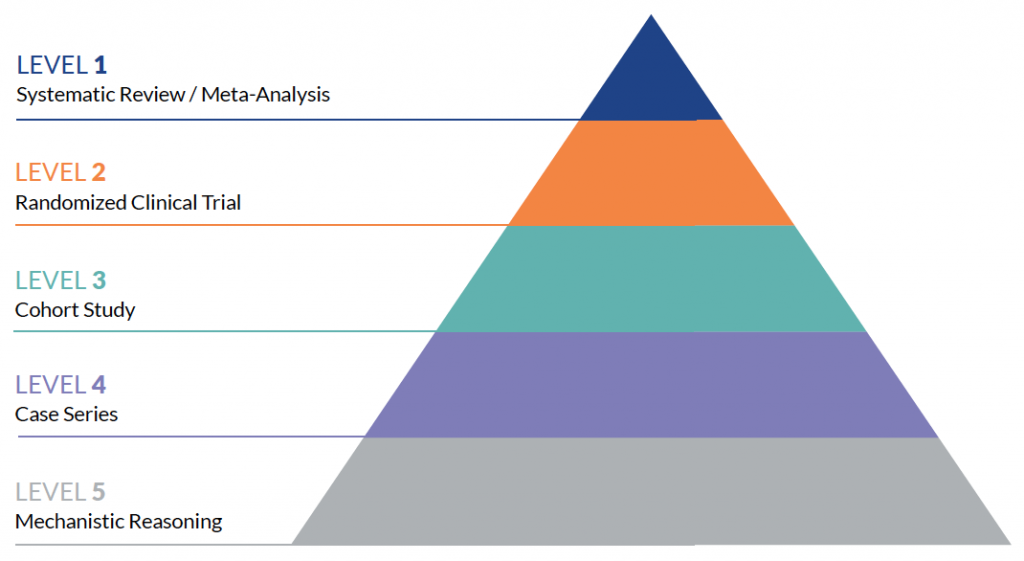

Level of Evidence

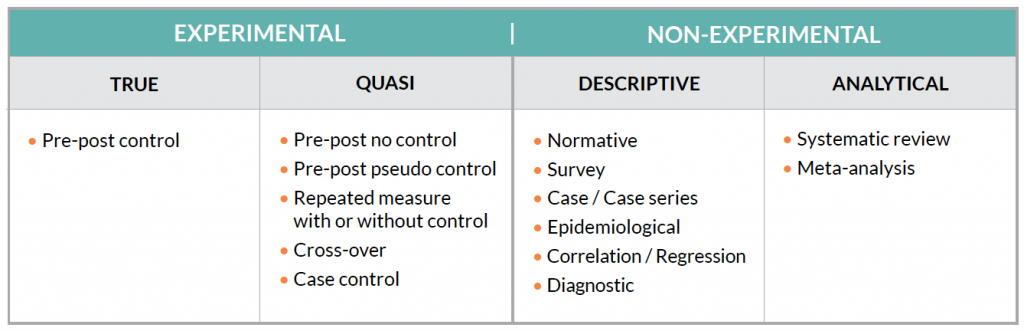

Research articles come in all shapes and sizes; in other words, several study designs can be used to answer a research question. In general, there are 2 types of research designs, experimental and non-experimental, and each type has sub-types with various designs.

These different designs also have different “levels” of evidence. The Oxford Centre for Evidence-Based Medicine (www.CEBM.net) provides 5 levels of evidence. Its 2011 levels are designed as a “short-cut for busy clinicians, researchers, or patients to find the likely best evidence.” In other words, certain study designs are stronger than others.

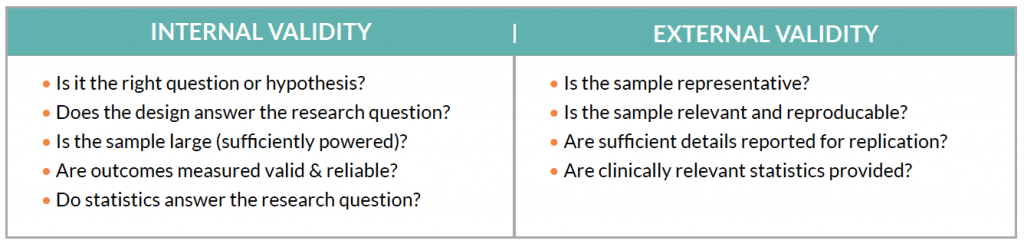
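
To make the idea of a hierarchy concrete, the sketch below ranks a list of retrieved studies by design using the 2011 OCEBM levels for a treatment-benefit question (“does this intervention help?”). It is a simplification under stated assumptions: the design labels and study titles are hypothetical, and it ignores the table’s allowance for grading a study up or down based on quality, imprecision, or effect size.

```python
# Simplified 2011 OCEBM levels for treatment-benefit questions.
# Assumption: no grading up or down for study quality or effect size.
OCEBM_TREATMENT_LEVEL = {
    "systematic review of randomized trials": 1,
    "randomized trial": 2,
    "non-randomized controlled cohort": 3,
    "case series": 4,
    "case-control study": 4,
    "mechanism-based reasoning": 5,
}


def rank_by_level(studies: list[tuple[str, str]]) -> list[tuple[str, str, int]]:
    """Return (title, design, level) sorted from strongest (1) to weakest (5)."""
    graded = [(title, design, OCEBM_TREATMENT_LEVEL[design]) for title, design in studies]
    return sorted(graded, key=lambda s: s[2])


# Hypothetical search results.
results = rank_by_level([
    ("Study A", "case series"),
    ("Study B", "systematic review of randomized trials"),
    ("Study C", "randomized trial"),
])
for title, design, level in results:
    print(f"Level {level}: {title} ({design})")
```

An unrecognized design label raises a `KeyError`, which is deliberate here: a study whose design you can’t classify deserves a closer look rather than a default level.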

Quality

The quality of the evidence is as important as (if not more important than) the level of evidence. A poorly designed or reported randomized controlled trial may be of little benefit in clinical practice. Unfortunately, this requires clinicians to understand how to identify potential bias within studies that may influence the results, or bias that may influence the clinical implementation of the study. These are known as threats to internal and external validity, respectively.

Unfortunately, there is an inherent weakness in clinical research: “sterility.” While clinical populations are often heterogeneous, researchers must create narrow, focused parameters for subject populations (making them homogeneous) to control for potential bias. This effort to maximize internal validity may compromise external validity. On the other end of the spectrum, some researchers lump patients with the same clinical diagnosis together, regardless of etiology, which may influence outcomes. For example, in a population of patients diagnosed with “anterior knee pain,” a hip strengthening intervention may be more effective in patients with hip weakness than in patients with quadriceps weakness, even with the same diagnosis.

Busy clinicians often assume that studies published as full articles in highly credible journals possess internal validity, but publication alone does not guarantee it. While some amount of bias is expected in research, it’s important that the authors do their best to minimize it within the design, identify the potential for bias in the study, and discuss how bias may influence the study results or implementation.

While overall quality is associated with internal validity, several scales and tools are used to assess other aspects of research bias in different study designs. One of the most commonly used in clinical rehabilitation trials is the “PEDro” scale, which grades studies on 11 criteria such as randomization and blinding (the first item, which concerns eligibility criteria, is not counted, so total scores range from 0 to 10). Other systems, such as GRADE, combine the quantity, quality, and level of studies to make overall recommendations.
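
Once each criterion has been judged, the PEDro total is simple to tally. In the sketch below the item names are paraphrased rather than the official wording, and the yes/no answers are hypothetical; the one rule it encodes is that item 1 (eligibility criteria) is recorded but excluded from the 0 to 10 total.

```python
# Paraphrased PEDro items, in scale order.
PEDRO_ITEMS = [
    "eligibility criteria specified",  # item 1: recorded, not scored
    "random allocation",
    "concealed allocation",
    "groups similar at baseline",
    "blinding of subjects",
    "blinding of therapists",
    "blinding of assessors",
    "adequate follow-up",
    "intention-to-treat analysis",
    "between-group comparisons reported",
    "point estimates and variability reported",
]


def pedro_score(answers: list[bool]) -> int:
    """Total PEDro score (0-10) from 11 yes/no answers; item 1 is excluded."""
    if len(answers) != len(PEDRO_ITEMS):
        raise ValueError(f"expected {len(PEDRO_ITEMS)} answers, got {len(answers)}")
    return sum(answers[1:])


# Hypothetical appraisal: every criterion met except blinding of subjects
# and therapists, which can be difficult in rehabilitation trials.
answers = [True] * len(PEDRO_ITEMS)
answers[PEDRO_ITEMS.index("blinding of subjects")] = False
answers[PEDRO_ITEMS.index("blinding of therapists")] = False
print(pedro_score(answers), "/ 10")  # 8 / 10
```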

Impact of the Evidence

Remember that scientific research and statistical significance are based on probability, and that statistical significance alone has limited value for clinical interpretation. The P-value is the probability of observing results at least as extreme as those in the study if the null hypothesis were true; it does not tell us the probability that the researcher made the right decision in rejecting or failing to reject the null hypothesis. We must make certain assumptions (such as a representative sample) to apply the results of a study to other individuals in a population. Yet, as clinicians, we know that every patient and situation is different. Not every patient has a successful outcome, in real life or in studies, so there’s no guarantee that the results of one study will be seen in your clinical population.

Since statistical significance alone is of little value in a clinical setting, clinically relevant statistics are now recommended for reporting clinical study results. A statistically significant outcome may not have clinical relevance. For example, a pain study may report that one group reduced their pain significantly more than another group; however, the difference was only 1 point out of 10 on the Visual Analog Scale. Depending on the diagnosis, patients may need a difference of 2 points or more to experience a meaningful benefit. The smallest change that patients perceive as beneficial is known as the “minimal clinically important difference” (MCID), and it is specific to the outcome measure and the population.
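
The pain example can be checked with a few lines of arithmetic. The sketch below assumes hypothetical summary data (a 1-point VAS difference, an SD of 2 points in each group, 100 patients per group) and an MCID of 2 points, and uses a large-sample z-test as a stand-in for whatever test a given study actually ran. It shows how a difference can be statistically significant yet still fall short of the MCID.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_p(mean_diff: float, sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Large-sample z-test p-value for a difference between two group means."""
    se = sqrt(sd1**2 / n1 + sd2**2 / n2)
    z = abs(mean_diff) / se
    return 2 * (1 - NormalDist().cdf(z))


def interpret(mean_diff: float, p: float, mcid: float, alpha: float = 0.05) -> dict:
    """Keep the statistical question separate from the clinical one."""
    return {
        "statistically_significant": p < alpha,
        "reaches_mcid": abs(mean_diff) >= mcid,
    }


# Hypothetical study: 1-point VAS difference, SD 2 in both groups, n = 100 each.
p = two_sided_p(1.0, 2.0, 100, 2.0, 100)
verdict = interpret(1.0, p, mcid=2.0)  # assumed MCID of 2 points
print(f"p = {p:.4f}", verdict)
```

Comparing only the point estimate with the MCID is itself a simplification; the confidence interval around the difference tells you whether a clinically important effect is still plausible.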

Another disadvantage of statistical significance is that the P-value gives no information on the size of the difference between groups (magnitude) or its direction (harm vs. benefit). Effect sizes quantify the magnitude of the difference between groups and, through their sign, its direction. A large effect size is often seen as clinically useful, while a very small effect is often described as “trivial.”
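
One common effect size for comparing two group means is Cohen’s d: the difference in means divided by the pooled standard deviation. The sketch below computes it from summary statistics and labels it with Cohen’s conventional benchmarks (0.2 small, 0.5 medium, 0.8 large, with values under 0.2 often called trivial). These cut-offs are rules of thumb, not outcome-specific thresholds like an MCID, and the group numbers are hypothetical.

```python
from math import sqrt


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d using the pooled standard deviation of two independent groups.

    The sign gives the direction: positive means group 1 scored higher,
    which is a benefit or a harm depending on whether higher is better.
    """
    pooled_sd = sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    return (mean1 - mean2) / pooled_sd


def describe(d):
    """Label |d| with Cohen's conventional (rule-of-thumb) benchmarks."""
    size = abs(d)
    if size < 0.2:
        return "trivial"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


# Hypothetical pain reduction (VAS points): taping group vs. control group.
d = cohens_d(3.0, 2.0, 30, 2.0, 2.0, 30)
print(round(d, 2), describe(d))  # 0.5 medium
```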

Finally, confidence intervals provide more clinically-relevant information, including an estimated point and range of the true value of a parameter within a population. Confidence intervals can be used to estimate effect sizes or outcome values. That’s the beauty of confidence intervals. We can predict with a high level of confidence (usually 95% confidence) that the “true” value of an outcome lies within a certain range.

Unfortunately, some studies do not provide effect sizes or confidence intervals; however, there are several online tools to help you estimate these values if the study provides the necessary data. Using clinically-relevant statistics can help improve your interpretation of study results.
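
As an example of what those calculators do, the sketch below estimates a 95% confidence interval for the difference between two group means from the means, standard deviations, and sample sizes a study reports. It assumes independent groups and uses the normal approximation (z of about 1.96), which is reasonable for larger samples; small studies call for a t-distribution instead. The numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def mean_diff_ci(mean1, sd1, n1, mean2, sd2, n2, level=0.95):
    """Normal-approximation CI for (mean1 - mean2) from summary statistics."""
    diff = mean1 - mean2
    se = sqrt(sd1**2 / n1 + sd2**2 / n2)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    return diff - z * se, diff + z * se


# Hypothetical trial: pain reduction of 3.0 vs 2.0 VAS points, SD 2.0, n = 100 per group.
low, high = mean_diff_ci(3.0, 2.0, 100, 2.0, 2.0, 100)
print(f"difference = 1.0, 95% CI {low:.2f} to {high:.2f}")
# difference = 1.0, 95% CI 0.45 to 1.55
```

Read against an assumed MCID of 2 points, the whole interval sits above zero (a real difference is likely) but below the MCID, so even the most optimistic estimate may not be clinically important.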

Source of Evidence

Finally, consider the source. Is there a full article available with sufficient detail provided for analysis and replication? Research abstracts such as those presented at national meetings should have less influence on answering your question than full-length articles. Also consider the publication. High-impact, indexed, peer-reviewed journals with a blinded review process are considered the best source. Secondary sources such as textbooks and trade magazines are generally lower-quality sources.

Do not rely on the journal name to establish credibility; there are many ‘predatory journals’ with fancy titles that offer a ‘pay to publish’ scheme, often for poor-quality studies. And even indexing in PubMed, the most prestigious database, does not guarantee the quality of a journal or article!

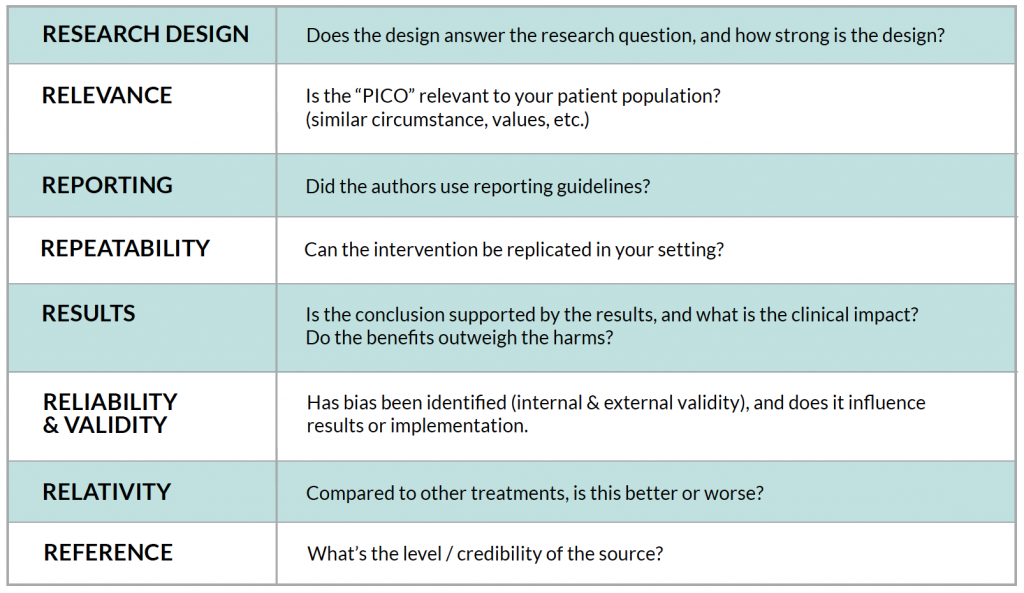

In summary, try to answer these “R” questions when analyzing an article to answer a clinical question:

Being an informed research consumer

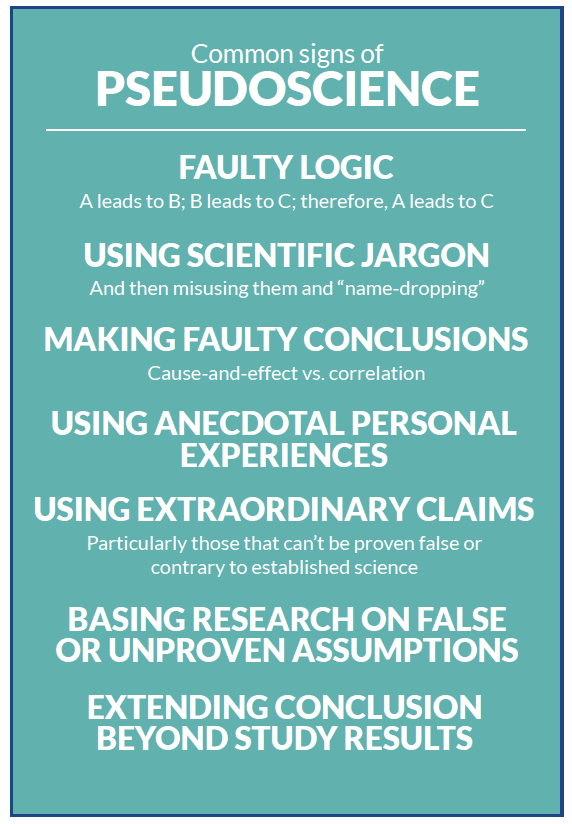

Researchers sometimes hide bias in their study, which may not be obvious to the typical clinician. More often, the media, manufacturers, and so-called “gurus” exhibit bias in their reporting through the subtle art of “pseudoscience.” Unfortunately, some gurus and others use the words “research” and “science” inappropriately to support their message. Because of this, clinicians should be skeptical of claims without being cynical, both in and outside of research. Here are some common signs of pseudoscience:

Today, many companies use ‘research’ to support their marketing efforts. Don’t just take their word for it: ask companies to back up their claims with solid evidence. According to the Federal Register, the FDA offers guidance on the research on which companies can base their medical device claims, using “adequate and well-controlled” studies:

- Clear statement of objective and methods

- Valid design (only level 1-3) with control (blinded and randomized)

- Subjects have disease or condition

- Methods are well-defined and reliable

- Appropriate statistical analysis for effects

ANSWER

Now that you’ve gathered and analyzed the evidence, what’s the answer to your question? It may not be as obvious as you think. There are 2 questions to consider when you read a research paper: is each study’s answer to its own research question appropriate, and what is the answer to your clinical question? Sometimes your answer will already be in a meta-analysis, which generally pools data from studies of varying quality. But meta-analyses have limitations, such as their date of publication and their inclusion/exclusion criteria. As with anything, your clinical decision should not rest on only one study, and because evidence-based practice combines the evidence with the patient presentation and clinician experience, be sure you have the best available evidence first!
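
To see what “pooling” means in practice, the sketch below shows the simplest approach, a fixed-effect inverse-variance meta-analysis: each study’s estimate is weighted by 1/SE², so larger, more precise studies count for more. The three study results are hypothetical. Real meta-analyses often use random-effects models instead when studies differ in population or intervention, which is one reason their conclusions depend on the inclusion criteria.

```python
from math import sqrt


def pool_fixed_effect(estimates: list[float], std_errors: list[float]) -> tuple[float, float]:
    """Fixed-effect inverse-variance pooled estimate and its standard error."""
    weights = [1 / se**2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * est for w, est in zip(weights, estimates)) / total
    return pooled, sqrt(1 / total)


# Hypothetical mean differences (VAS points) and standard errors from three trials.
estimates = [1.0, 0.5, 1.5]
std_errors = [0.5, 0.25, 0.5]
pooled, pooled_se = pool_fixed_effect(estimates, std_errors)
print(f"pooled difference {pooled:.2f} (SE {pooled_se:.2f})")
# pooled difference 0.75 (SE 0.20)
```

The middle trial, with the smallest standard error, carries two-thirds of the total weight, which is why the pooled result sits close to its estimate.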

What if you have 10 studies on your topic that have different conclusions? If you are faced with multiple studies on an intervention with different outcomes, look for reasons why they may differ, specifically in their PICO. And of course, look for overall bias in each study and the authors’ attempts to minimize it.

- Population: Compare inclusion/exclusion criteria and recruitment.

- Intervention: Were treatment parameters clearly reported and similar?

- Comparison: Was the comparison treatment (control) truly a control?

- Outcome Measures: Were similar tools used to measure the same thing, such as balance?
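
When studies disagree, laying their PICO elements side by side makes the differences easier to spot. The sketch below is a minimal illustration with hypothetical study descriptions; in practice the comparison is a judgment call, and exact text matching only flags candidates for a closer read.

```python
from dataclasses import dataclass, fields


@dataclass
class Pico:
    population: str
    intervention: str
    comparison: str
    outcome: str


def pico_differences(a: Pico, b: Pico) -> dict[str, tuple[str, str]]:
    """Return the PICO fields whose descriptions differ between two studies."""
    return {
        f.name: (getattr(a, f.name), getattr(b, f.name))
        for f in fields(Pico)
        if getattr(a, f.name) != getattr(b, f.name)
    }


# Hypothetical summaries of two taping trials with conflicting conclusions.
study_a = Pico("women after breast cancer surgery", "kinesiology taping, 4 weeks",
               "compression bandaging", "arm circumference")
study_b = Pico("women after breast cancer surgery", "kinesiology taping, 4 weeks",
               "no treatment", "arm volume")

for name, (a_value, b_value) in pico_differences(study_a, study_b).items():
    print(f"{name}: {a_value!r} vs {b_value!r}")
```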

APPLY

This step is easy: put your answer into practice. Following the principles of evidence-based practice, combine the best available evidence you’ve analyzed with your clinical experience and the patient presentation. If the answer is clear (i.e., yes or no), great. If not (i.e., inconclusive or conflicting), use your clinical judgement.

There are a few factors to consider when deciding to apply your findings:

- Harm vs. Benefit: Does the benefit of the outcome outweigh potential harms, potentially in comparison to the standard treatment?

- Cost-Benefit: Does the benefit of the outcome outweigh the cost of implementing the treatment?

- Feasibility / reproducibility: Can the treatment be implemented in your setting?

Don’t forget to share your findings with your colleagues. Having a journal club or other research meeting regularly helps facilitate evidence-based practice to support clinical decision making.

ASSESS

Lastly, you should assess the impact of your clinical decision. Was your answer correct for your situation? How beneficial was your decision? Researchers have developed a system for quantifying that: RE-AIM (www.re-aim.org). Specifically, RE-AIM quantifies:

- Reach: how many people in the target group received the intervention?

- Effectiveness: how effective was the intervention (in measurable outcomes)?

- Adoption: how many clinicians or settings took up the intervention?

- Implementation: how consistently is the intervention performed, and how costly?

- Maintenance: How did the intervention affect individuals over time?
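
Several RE-AIM dimensions can be reported as simple proportions from clinic records. The sketch below assumes hypothetical counts for the taping example and follows the framework’s usual convention that reach is counted among eligible patients and adoption among the clinicians (or settings) who could deliver the intervention. Implementation (consistency and cost) is left out because it usually needs more than a single ratio, and the “outcome goal” behind effectiveness is whatever threshold your clinic chooses.

```python
from dataclasses import dataclass


@dataclass
class ClinicCounts:
    eligible_patients: int     # target group in your clinic
    treated_patients: int      # patients who actually received the intervention
    improved_patients: int     # treated patients who met the chosen outcome goal
    clinicians: int            # clinicians who could deliver the intervention
    adopting_clinicians: int   # clinicians who actually used it
    improved_at_followup: int  # improved patients still improved at follow-up


def re_aim_proportions(c: ClinicCounts) -> dict[str, float]:
    """Express four RE-AIM dimensions as proportions (hypothetical definitions)."""
    return {
        "reach": c.treated_patients / c.eligible_patients,
        "effectiveness": c.improved_patients / c.treated_patients,
        "adoption": c.adopting_clinicians / c.clinicians,
        "maintenance": c.improved_at_followup / c.improved_patients,
    }


# Hypothetical 6-month audit of the kinesiology taping program.
counts = ClinicCounts(eligible_patients=40, treated_patients=30, improved_patients=18,
                      clinicians=8, adopting_clinicians=6, improved_at_followup=12)
for dimension, value in re_aim_proportions(counts).items():
    print(f"{dimension}: {value:.0%}")
```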

For example, if your clinic decides to use a new kinesiology taping technique in patients with lymphedema after breast cancer surgery, use the RE-AIM model to assess how well the intervention worked in patients, as well as in your clinic. RE-AIM can assess how well the intervention was adopted and implemented by clinicians as well as patients. By quantifying its success, you not only support the use of an evidence-based intervention, but you may also have data specific to your clinic to support marketing efforts in the community!

Staying up-to-date with the literature can be very time-consuming for the busy clinician. The “6A” approach provides an efficient framework for answering clinical questions with research to support evidence-based practice.

Do you have any questions on how to improve your clinical decision making with research? Post them below or contact me!